There are many considerations in selecting the features needed to discriminate between classes. Besides the discriminatory power of each individual feature, the correlation among the features should also be taken into account when choosing a suitable combination of features.

Class separability measures are an important tool in feature selection [1]. Several scatter matrices, defined below, are used to describe how the feature vector samples are scattered. Three classes were first generated assuming the following mean and variance parameters:

The scatter plot below shows more clearly how differently each class is dispersed.
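A minimal sketch of how such classes can be generated, assuming isotropic Gaussian classes (each feature has the same variance and features are uncorrelated). The means and variances below are hypothetical placeholders, not the post's actual parameters, and `generate_classes` is a hypothetical helper name.

```python
import numpy as np

def generate_classes(means, variances, n_per_class, seed=0):
    """Draw n_per_class samples from each isotropic Gaussian N(mean_i, variance_i * I).

    Returns the stacked feature vectors X, shape (n_classes * n_per_class, dim),
    and the integer class labels y.
    """
    rng = np.random.default_rng(seed)
    samples, labels = [], []
    for label, (mean, var) in enumerate(zip(means, variances)):
        mean = np.asarray(mean, dtype=float)
        cov = var * np.eye(mean.size)          # same variance along every feature
        samples.append(rng.multivariate_normal(mean, cov, size=n_per_class))
        labels.append(np.full(n_per_class, label))
    return np.vstack(samples), np.concatenate(labels)

# Hypothetical parameters; the post's actual means and variances are not reproduced here.
means = [[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]]
variances = [1.0, 1.0, 1.0]
X, y = generate_classes(means, variances, n_per_class=100)
print(X.shape, np.bincount(y))   # (300, 2) [100 100 100]
```

Passing `X[:, 0]` and `X[:, 1]` per label to any plotting library reproduces a scatter plot of this kind.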

$S_w$ and $S_b$ are the within-class and between-class scatter matrices, respectively. The within-class scatter matrix measures how the samples of each class are spread around their class mean. With $\mu_0$ as the global mean vector, the between-class scatter matrix describes how well the classes are separated from each other. The mixture scatter matrix $S_m$ is the covariance matrix of the feature vectors with respect to the global mean. The matrices calculated for the three clusters are given below.
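For reference, the usual definitions (as in [1]) are $S_w = \sum_{i=1}^{M} P_i S_i$ with $S_i = E[(x-\mu_i)(x-\mu_i)^T]$, $S_b = \sum_{i=1}^{M} P_i (\mu_i-\mu_0)(\mu_i-\mu_0)^T$, and $S_m = E[(x-\mu_0)(x-\mu_0)^T] = S_w + S_b$, where $P_i$ is the prior of class $\omega_i$ and $M$ is the number of classes. The sketch below estimates them from labeled samples, assuming priors estimated as $n_i/N$ and divide-by-$n$ covariance estimates; `scatter_matrices` is a hypothetical helper name.

```python
import numpy as np

def scatter_matrices(X, y):
    """Return (S_w, S_b, S_m) estimated from samples X (N x dim) with labels y."""
    X = np.asarray(X, dtype=float)
    N, dim = X.shape
    mu0 = X.mean(axis=0)                      # global mean vector
    S_w = np.zeros((dim, dim))
    S_b = np.zeros((dim, dim))
    for label in np.unique(y):
        Xi = X[y == label]
        P_i = len(Xi) / N                     # estimated prior of this class
        mu_i = Xi.mean(axis=0)
        D = Xi - mu_i
        S_w += P_i * (D.T @ D) / len(Xi)      # prior times the class covariance
        d = (mu_i - mu0)[:, None]
        S_b += P_i * (d @ d.T)                # spread of the class mean around mu0
    D0 = X - mu0
    S_m = (D0.T @ D0) / N                     # covariance about the global mean
    return S_w, S_b, S_m

# Usage on two hypothetical Gaussian classes.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal([0.0, 0.0], 1.0, size=(50, 2)),
               rng.normal([4.0, 4.0], 1.0, size=(50, 2))])
y = np.repeat([0, 1], 50)
S_w, S_b, S_m = scatter_matrices(X, y)
print(np.allclose(S_m, S_w + S_b))   # True
```

With these sample estimates the identity $S_m = S_w + S_b$ holds exactly (up to rounding), because the cross terms $\sum_{x \in \omega_i}(x-\mu_i)$ vanish.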

From the scatter matrices, the criteria $J_1$, $J_2$, and $J_3$ can be computed.
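A minimal sketch, assuming the common forms of these criteria from [1]: $J_1 = \operatorname{trace}(S_m)/\operatorname{trace}(S_w)$, $J_2 = |S_m|/|S_w|$, and $J_3 = \operatorname{trace}(S_w^{-1} S_m)$. The helper name `separability_criteria` and the toy matrices are hypothetical.

```python
import numpy as np

def separability_criteria(S_w, S_m):
    """Return (J1, J2, J3) from the within-class and mixture scatter matrices."""
    S_w = np.asarray(S_w, dtype=float)
    S_m = np.asarray(S_m, dtype=float)
    J1 = np.trace(S_m) / np.trace(S_w)                # ratio of total to within-class spread
    J2 = np.linalg.det(S_m) / np.linalg.det(S_w)      # ratio of determinants (generalized variances)
    J3 = np.trace(np.linalg.solve(S_w, S_m))          # trace(S_w^{-1} S_m) without forming the inverse
    return J1, J2, J3

# Toy matrices (hypothetical): unit within-class scatter plus a diagonal between-class scatter.
S_w = np.eye(2)
S_b = np.diag([3.0, 1.0])
J1, J2, J3 = separability_criteria(S_w, S_w + S_b)
print(J1, J2, J3)   # J1 = 3, J2 = 8, J3 = 6, up to floating-point rounding
```

Using `np.linalg.solve` instead of an explicit inverse is a numerical-stability choice; it computes the same $S_w^{-1} S_m$.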

The criteria take large values when the samples of each class are tightly clustered around their mean and the classes are well separated, so they are used to select features optimally. Next, the variances of the previous example are changed. The parameters, scatter plot, and calculated criteria are shown below.

In this example, the classes are well separated and the samples are tightly clustered, and the criteria values are noticeably higher than in the previous example. In the next example, the variances are higher, so the classes overlap and are not well clustered.

As expected, the criteria values are low.
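The trend in these examples can be checked with a small experiment. This sketch assumes three hypothetical isotropic Gaussian classes with fixed means and compares a small shared variance (0.25) against a large one (4.0); the values are illustrative and are not the post's parameters.

```python
import numpy as np

def scatter_matrices(X, y):
    """Within-class, between-class and mixture scatter matrices, priors estimated as n_i / N."""
    N, dim = X.shape
    mu0 = X.mean(axis=0)
    S_w, S_b = np.zeros((dim, dim)), np.zeros((dim, dim))
    for label in np.unique(y):
        Xi = X[y == label]
        P_i, mu_i = len(Xi) / N, Xi.mean(axis=0)
        S_w += P_i * np.cov(Xi, rowvar=False, bias=True)   # class covariance about mu_i
        S_b += P_i * np.outer(mu_i - mu0, mu_i - mu0)      # class mean about the global mean
    S_m = np.cov(X, rowvar=False, bias=True)               # covariance about the global mean
    return S_w, S_b, S_m

def separability_criteria(S_w, S_m):
    J1 = np.trace(S_m) / np.trace(S_w)
    J2 = np.linalg.det(S_m) / np.linalg.det(S_w)
    J3 = np.trace(np.linalg.solve(S_w, S_m))
    return J1, J2, J3

def sample_classes(means, variance, n_per_class=200, seed=0):
    """Isotropic Gaussian classes that all share the same variance."""
    rng = np.random.default_rng(seed)
    X = np.vstack([rng.normal(m, np.sqrt(variance), size=(n_per_class, len(m))) for m in means])
    y = np.repeat(np.arange(len(means)), n_per_class)
    return X, y

means = [[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]]    # hypothetical class means
results = {}
for variance in (0.25, 4.0):                       # tight vs. loose clusters (hypothetical)
    X, y = sample_classes(means, variance)
    S_w, _, S_m = scatter_matrices(X, y)
    results[variance] = separability_criteria(S_w, S_m)
    print(f"variance={variance}: J1={results[variance][0]:.2f}, "
          f"J2={results[variance][1]:.2f}, J3={results[variance][2]:.2f}")
```

With the means held fixed, the between-class scatter barely changes while $S_w$ grows with the variance, so all three ratios drop for the loose clusters.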

Another way to describe the relation between two classes is the receiver operating characteristic (ROC) curve, which gives information about how much the classes overlap.

Suppose we have the probability density functions of a feature for two classes whose distributions overlap, as shown in figure (a) below. For a given threshold, illustrated by the vertical line, values to the left of the threshold are assigned to class $\omega_1$ and values to the right to class $\omega_2$. Let $\alpha$ be the probability of a wrong decision concerning class $\omega_1$ (a sample of $\omega_1$ falling to the right of the threshold) and $\beta$ the probability of a wrong decision concerning class $\omega_2$. The probabilities of a correct decision are then $1-\alpha$ and $1-\beta$ for classes $\omega_1$ and $\omega_2$, respectively. The ROC curve shown in figure (b) is obtained by moving the threshold over different positions and plotting $1-\beta$ against $\alpha$.

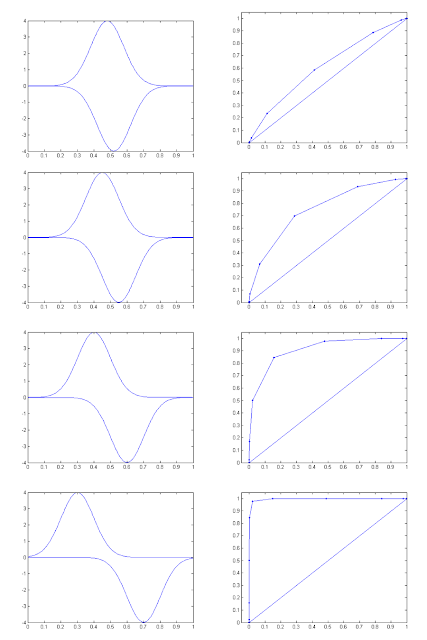
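Writing $\theta$ for the threshold (a symbol introduced here) and $p(x \mid \omega_i)$ for the class-conditional densities of the feature $x$, the two quantities plotted on the ROC curve at one threshold position are:

$$
\alpha(\theta) = \int_{\theta}^{\infty} p(x \mid \omega_1)\,dx,
\qquad
1 - \beta(\theta) = 1 - \int_{-\infty}^{\theta} p(x \mid \omega_2)\,dx = \int_{\theta}^{\infty} p(x \mid \omega_2)\,dx
$$

If the two densities are identical, the two integrals coincide for every $\theta$, which gives $\alpha = 1-\beta$, the diagonal line.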

Consider two arbitrary probability density functions placed at different separations from each other. The resulting ROC curves, obtained by moving the threshold from 0 to 1 in steps of 0.1, are illustrated.
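A minimal sketch of how ROC points can be estimated from samples with the threshold stepped from 0 to 1 by 0.1. It assumes features in $[0, 1]$ and uses two hypothetical Beta distributions as stand-ins for the post's arbitrary density functions; `roc_points` is a hypothetical helper name.

```python
import numpy as np

def roc_points(x1, x2, thresholds):
    """Empirical ROC points (alpha, 1 - beta).

    Values left of the threshold are assigned to omega_1, values right of it to omega_2.
    """
    x1, x2 = np.asarray(x1), np.asarray(x2)
    alpha = np.array([np.mean(x1 > t) for t in thresholds])           # omega_1 samples wrongly sent to omega_2
    one_minus_beta = np.array([np.mean(x2 > t) for t in thresholds])  # omega_2 samples correctly kept
    return alpha, one_minus_beta

rng = np.random.default_rng(0)
x1 = rng.beta(2, 5, size=1000)             # hypothetical omega_1 feature values, skewed toward 0
x2 = rng.beta(5, 2, size=1000)             # hypothetical omega_2 feature values, skewed toward 1
thresholds = np.linspace(0.0, 1.0, 11)     # 0.0, 0.1, ..., 1.0
alpha, one_minus_beta = roc_points(x1, x2, thresholds)
for t, a, b in zip(thresholds, alpha, one_minus_beta):
    print(f"threshold={t:.1f}  alpha={a:.3f}  1-beta={b:.3f}")
```

`np.linspace` is used instead of `np.arange(0, 1.1, 0.1)` so that exactly 11 thresholds are produced without floating-point surprises at the end point.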

When the two classes overlap heavily, the area between the ROC curve and the diagonal line is small, and as the overlap becomes complete, the curve approaches the diagonal. This is because, with complete overlap, $\alpha = 1-\beta$ for every threshold value. As the two classes become better separated, the curve moves away from the diagonal and the area between them grows. For completely separated classes, we expect that, as the threshold moves, $1-\beta = 1$ for every nonzero value of $\alpha$, so the curve runs along the left and top edges of the plot and the area between it and the diagonal reaches its maximum of $1/2$.
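The area between the ROC curve and the diagonal can be estimated with the trapezoidal rule. This sketch assumes the same threshold convention (values left of the threshold go to $\omega_1$) and uses hypothetical uniform samples for the two extreme cases; `roc_area_above_diagonal` is a hypothetical helper name.

```python
import numpy as np

def roc_area_above_diagonal(x1, x2, thresholds):
    """Signed area between the ROC curve (alpha, 1 - beta) and the diagonal alpha = 1 - beta."""
    x1, x2 = np.asarray(x1), np.asarray(x2)
    t = np.sort(np.asarray(thresholds))[::-1]                  # largest threshold first
    alpha = np.array([np.mean(x1 > s) for s in t])             # non-decreasing as the threshold drops
    one_minus_beta = np.array([np.mean(x2 > s) for s in t])    # also non-decreasing
    # Close the curve at its end points (0, 0) and (1, 1).
    alpha = np.concatenate(([0.0], alpha, [1.0]))
    one_minus_beta = np.concatenate(([0.0], one_minus_beta, [1.0]))
    # Trapezoidal rule for the area under the curve.
    auc = np.sum(np.diff(alpha) * (one_minus_beta[1:] + one_minus_beta[:-1]) / 2.0)
    return auc - 0.5                                           # the diagonal encloses area 0.5

rng = np.random.default_rng(0)
thresholds = np.linspace(0.0, 1.0, 11)

# Complete overlap (hypothetical): both classes drawn from the same distribution.
same = rng.uniform(0.0, 1.0, size=(2, 5000))
area_overlap = roc_area_above_diagonal(same[0], same[1], thresholds)

# Complete separation (hypothetical): disjoint supports [0, 0.4) and [0.6, 1).
x1 = rng.uniform(0.0, 0.4, size=5000)
x2 = rng.uniform(0.6, 1.0, size=5000)
area_separated = roc_area_above_diagonal(x1, x2, thresholds)

print(area_overlap, area_separated)   # close to 0 and close to 0.5, respectively
```

Ordering the points by decreasing threshold keeps both coordinates monotone, so consecutive trapezoids never double back along the curve.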

--

Reference

[1] S. Theodoridis and K. Koutroumbas, Pattern Recognition, 3rd ed., Chapter 5, Academic Press, San Diego, 2006
