Our fifth activity in AP 186 is another fun one. We are asked to estimate the area of shapes in synthetic images, and then to do the same for images of real places, using the Scilab programming language. It reminded me of the research that my VIP friends talk about, which I always find fascinating to listen to. I cannot explain it as well as they do, but basically it is about capturing images of the sea floor, because you wouldn't want to touch or maybe destroy the treasures of the sea. From the images, not only the area but also the depth, surface roughness and other important information can be obtained. These let them assess the condition of the corals and the like, and help preserve our sea treasures. Things like this make me realize even more the importance of image processing. So, I should now start on our task. :)

Some background first! Green's theorem gives a way to determine the area enclosed by a closed path. It can be written as the equation [1]
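
For reference, the area form of Green's theorem that matches this description can be written as below. The symbols $A$ (area), $R$ (the enclosed region) and $C$ (its boundary contour) are notation assumed here, not taken from the manual [1]:

$$
A = \iint_R dx\,dy = \frac{1}{2}\oint_C \left( x\,dy - y\,dx \right)
$$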

The double integral on the left side of the equation represents the area enclosed by a contour. We can relate this to a line integral over the contour, traversed in the counterclockwise direction. In discrete form, the area can be computed as follows [1]
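
A common way to write this discrete sum, assuming the contour is sampled at $N$ points $(x_i, y_i)$ ordered counterclockwise, with the index wrapping around so that $(x_{N+1}, y_{N+1}) = (x_1, y_1)$, is:

$$
A = \frac{1}{2}\sum_{i=1}^{N} \left( x_i\,y_{i+1} - y_i\,x_{i+1} \right)
$$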

where x and y are the coordinates of the points that trace the contour.
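
To make the discrete form concrete, here is a minimal Python sketch (Python rather than the Scilab used in the activity; the function name `green_area` is made up for illustration). It sums the cross terms over consecutive contour points and closes the contour by wrapping the last point back to the first.

```python
def green_area(xs, ys):
    """Discrete Green's theorem area of a closed contour.

    xs, ys: coordinates of the contour points, ordered along the contour.
    The result is positive for counterclockwise order and negative for
    clockwise order.
    """
    n = len(xs)
    total = 0.0
    for i in range(n):
        j = (i + 1) % n  # next point, wrapping around at the end
        total += xs[i] * ys[j] - ys[i] * xs[j]
    return 0.5 * total


# Corners of a 50 x 50 square, listed counterclockwise.
square_x = [0, 50, 50, 0]
square_y = [0, 0, 50, 50]
area = green_area(square_x, square_y)
print(area)  # 2500.0
```

Listing the same corners clockwise gives -2500.0, which is why the contour has to be traversed in a consistent direction.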

Now, for the activity proper..

First, synthetic images of a square and a circle were produced using Scilab code (as was done in Activity 3). Both images are 100 pixels by 100 pixels, but the sizes of the shapes differ: the circle has a known radius of 25 pixels and the square has a side of 50 pixels.

Figure: Code for simulating a square synthetic image (top) and a circle synthetic image (bottom). Beside them are the resulting synthetic images.
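
As a rough Python equivalent of the synthetic images described above (this is not the Scilab code in the figure), the sketch below builds both shapes as nested lists of 0 (black) and 1 (white). The helper names `make_square` and `make_circle`, and the choice to center the shapes, are assumptions made for illustration.

```python
SIZE = 100  # both images are 100 x 100 pixels


def make_square(size=SIZE, side=50):
    """Centered white (1) square on a black (0) background."""
    start = (size - side) // 2
    return [[1 if start <= r < start + side and start <= c < start + side else 0
             for c in range(size)] for r in range(size)]


def make_circle(size=SIZE, radius=25):
    """Centered white (1) disc on a black (0) background."""
    center = (size - 1) / 2.0  # geometric center of the pixel grid
    return [[1 if (r - center) ** 2 + (c - center) ** 2 <= radius ** 2 else 0
             for c in range(size)] for r in range(size)]


square = make_square()
circle = make_circle()

# Counting white pixels gives a quick sanity check on the shape sizes.
square_pixels = sum(map(sum, square))
circle_pixels = sum(map(sum, circle))
print(square_pixels)  # 2500 white pixels for a 50-pixel side
print(circle_pixels)  # close to pi * 25**2, which is about 1963.5
```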

Using the edge() function of Scilab, the edges of the synthetic images were obtained. I ran into a problem where the code would not accept some images, with an error saying that the input image should be single channel. The function only accepts single-channel images, so you have to make sure the image is saved with one channel. In Paint, choose the monochrome bitmap format. You can also convert the image to one channel in the code: I tried the function rgb2gray(), and it worked :). So, moving on.. the edge() function takes a method argument, which can be 'sobel', 'prewitt', 'log', 'fftderiv' or 'canny' according to the Scilab help. The output images are shown below.
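
To illustrate what converting to a single channel means, here is a small Python sketch that collapses each (R, G, B) pixel into one gray value. The weights 0.299, 0.587 and 0.114 are the commonly used luma weights; whether Scilab's rgb2gray() uses exactly these values is an assumption, and the function name `rgb_to_gray` is made up for this example.

```python
def rgb_to_gray(rgb_image):
    """Convert rows of (R, G, B) tuples into rows of single gray values."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]


# A tiny 2 x 2 test image: black, white, pure red, pure blue.
rgb = [[(0, 0, 0), (255, 255, 255)],
       [(255, 0, 0), (0, 0, 255)]]
gray = rgb_to_gray(rgb)
print(gray)  # [[0, 255], [76, 29]]
```

Each pixel now holds one number instead of three, which is the form that an edge detector expecting a single-channel image needs.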

Figure: Resulting edge of the circle using the different methods: sobel, prewitt, log, fftderiv and canny (from left to right).

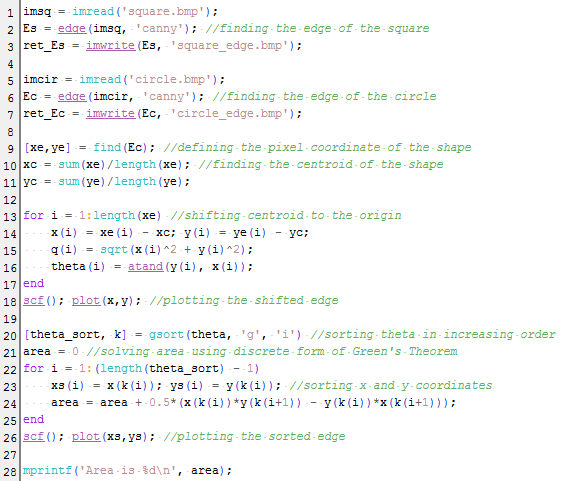

I chose the 'canny' method as the best because it outlines the edge with a single thin line, unlike the others, which are either thick or segmented. Then the pixel coordinates of the edge were put into an array. The plot of the coordinates is shown in the figure below. However, the coordinates were not sorted. To apply Green's theorem, we need sorted coordinates that trace the edge of the shape in order. Sorting the coordinates by increasing angle is effective. To do this, the centroid of the shape was found and the whole edge was shifted so that the centroid lies at the origin. The pixel positions were then converted to polar coordinates. Lines 13-17 calculate the radius and angle values. Then the function gsort() was used to sort by increasing angle. What we really want is the order of the indices of the sorted angles, so we know which point comes first, second, .. to last. We then apply this ordering to the x and y coordinates. We can now apply the discrete form of Green's theorem. Lines 22-25 do the sorting and the calculation of the area.

Figure: Plot of the unsorted (left) and sorted (right) coordinates of the edge of the circle.
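
The steps above (find the edge, shift to the centroid, sort by angle, apply the discrete Green's theorem) can be sketched in Python as follows. This is not the Scilab code from the activity: a simple 4-neighbor boundary test stands in for edge() with 'canny', Python's list sort stands in for gsort(), and all function names are hypothetical.

```python
import math


def make_circle(size=100, radius=25):
    """Centered white (1) disc on a black (0) background."""
    center = (size - 1) / 2.0
    return [[1 if (r - center) ** 2 + (c - center) ** 2 <= radius ** 2 else 0
             for c in range(size)] for r in range(size)]


def boundary_pixels(img):
    """White pixels with at least one black 4-neighbor (stand-in for edge())."""
    rows, cols = len(img), len(img[0])
    pts = []
    for r in range(rows):
        for c in range(cols):
            if not img[r][c]:
                continue
            neighbors = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
            if any(not (0 <= rr < rows and 0 <= cc < cols) or not img[rr][cc]
                   for rr, cc in neighbors):
                pts.append((c, r))  # x = column index, y = row index
    return pts


def area_from_edge(pts):
    """Sort edge points by angle about their centroid, then apply Green's theorem."""
    cx = sum(x for x, _ in pts) / len(pts)
    cy = sum(y for _, y in pts) / len(pts)
    shifted = [(x - cx, y - cy) for x, y in pts]          # centroid at the origin
    shifted.sort(key=lambda p: math.atan2(p[1], p[0]))    # increasing angle
    n = len(shifted)
    total = 0.0
    for i in range(n):
        x0, y0 = shifted[i]
        x1, y1 = shifted[(i + 1) % n]  # wrap around to close the contour
        total += x0 * y1 - y0 * x1
    return 0.5 * total


edge = boundary_pixels(make_circle())
area = area_from_edge(edge)
print(area)  # below pi * 25**2 (about 1963.5): edge pixel centers lie inside the disc
```

Sorting by angle assumes that a ray from the centroid meets the edge only once, which holds for the circle and the square; a shape with deep inlets would need a different way of ordering its edge points.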

The complete code is shown below.

The area obtained for the circle was close to the expected value. To check the reliability of the code, I tried other synthetic images (a bigger shape, a shifted centroid, other shapes), as shown below.

The expected and actual areas and the percent deviations are tabulated below. The differences are small enough to suggest that the technique is reliable for getting the area of a shape in an image.

Now, it's time to put this to practical use. What if you want to know the area of a place? Your favorite vacation destination? Or even your house? I chose Lake Lanao, the largest lake in Mindanao and the second largest in the Philippines; its actual area can easily be googled to be 340 sq. km [2]. We need a map of Lake Lanao, which was again taken from Google Maps [3]. Using GIMP, the desired area was traced using the magic tool and set up like the synthetic images, with a black background and a white shape. This is important since we are using the function edge(). The scale on the map was also used to relate pixels to physical distance. From the code, the area was 59126.629 sq. pixels. This can be converted to sq. km using the scale found at the lower left of the map, about 66 pixels : 5 km. The computed area comes out to 339.34016 sq. km, a percent deviation of 0.19% from the expected area. We are now more convinced that this is an effective technique.

Figure: The map of Lake Lanao on top (taken from Google Maps [3]), the synthetic image tracing the lake (bottom left) and the edge detected by Scilab (bottom right).
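
The unit conversion for the lake can be checked with a few lines of Python, using only the numbers quoted above. The variable names are made up for this sketch. The key point is that the map scale relates lengths, so it has to be squared before it is applied to an area.

```python
area_px = 59126.629    # area in square pixels, as reported above
px_per_scale = 66.0    # length of the map scale bar in pixels
km_per_scale = 5.0     # length of the map scale bar in km
expected_km2 = 340.0   # reference area of the lake [2]

km_per_px = km_per_scale / px_per_scale
area_km2 = area_px * km_per_px ** 2  # areas scale with the square of length
percent_dev = abs(area_km2 - expected_km2) / expected_km2 * 100

print(round(area_km2, 2))     # 339.34
print(round(percent_dev, 2))  # 0.19
```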

In doing this activity, I encountered many problems with the technique and with how to incorporate it into the code. For that, I would like to thank with all my heart my classmate, colleague, and best of friends, Nestor Bareza, for patiently helping me.

I think I would rate myself 12/10 since I've done the main goals and the bonus part of the activity :)

____________________

References

[1] Soriano, M. "Area Estimation for Images with Defined Edges." AP 186 Laboratory Manual. National Institute of Physics, University of the Philippines, Diliman. 2013.

[2] Lake Lanao. Retrieved from http://en.wikipedia.org/wiki/Lake_Lanao

[3] Lanao Lake, Philippines. Retrieved from https://maps.google.com/